AI search doesn’t reward a single “best” content type. It rewards different content formats depending on where the buyer is in their decision journey.

As with SEO, a strong Generative Engine Optimization (GEO) program isn’t built on a single tactic applied to every customer segment or journey stage. It’s an integrated system that earns visibility at multiple points in the customer journey, with content designed for different jobs:

- Answering informational questions, before the customer even knows they’re shopping

- Establishing authority and relevance when they know they have a problem

- Winning trust and reducing risk when they’re deciding what to buy

To understand what content formats get cited in AI answers at each customer journey stage, I created a framework of queries across three stages of awareness:

- Problem Unaware: General topic questions or category exploration

  “What is content marketing?”

- Problem Aware: A pain point is present, but the buyer isn’t shopping yet

  “How do I scale organic campaigns profitably?”

- Solution Aware: The buyer is comparing options and moving toward purchase

  “Which are the best content optimization tools?”

Which content types get cited most often at each customer journey stage?

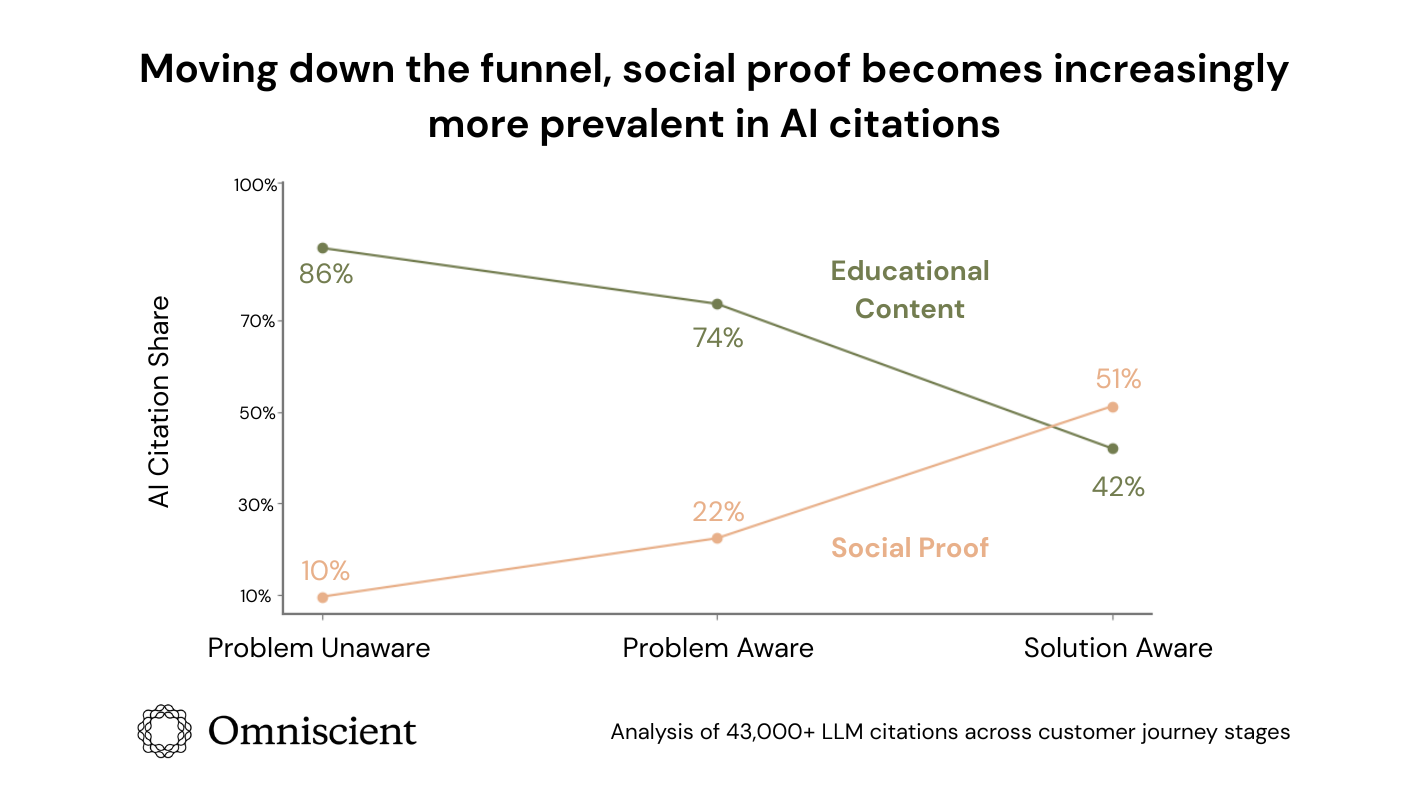

Of course, when buyers ask questions like “What is content marketing?”, we expect educational content to win.

But when the prompts introduce terms like “best tools for”, “top products”, or “most reliable app”, LLMs cite social proof more frequently, reflecting the buyer’s shift from learning to evaluating.
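If you want to audit your own prompt set along these lines, a simple first pass is to flag prompts that contain evaluative phrasing. The sketch below is a hypothetical helper, not part of the study: the patterns are assumptions loosely based on the example terms above, and a keyword match is only a rough proxy for buyer intent, not a model of how LLMs choose sources.

```python
import re

# Hypothetical trigger patterns, loosely based on "best tools for",
# "top products", and "most reliable app". A real audit would need a
# larger, tested list.
EVALUATIVE_PATTERNS = [
    r"\bbest\b",
    r"\btop\b",
    r"\bmost reliable\b",
]


def is_evaluative(prompt: str) -> bool:
    """Return True if the prompt uses buyer-evaluation phrasing."""
    text = prompt.lower()
    return any(re.search(pattern, text) for pattern in EVALUATIVE_PATTERNS)


prompts = [
    "What is content marketing?",
    "How do I scale organic campaigns profitably?",
    "Which are the best content optimization tools?",
]
for p in prompts:
    # Prints False, False, True for the three example queries.
    print(is_evaluative(p), p)
```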

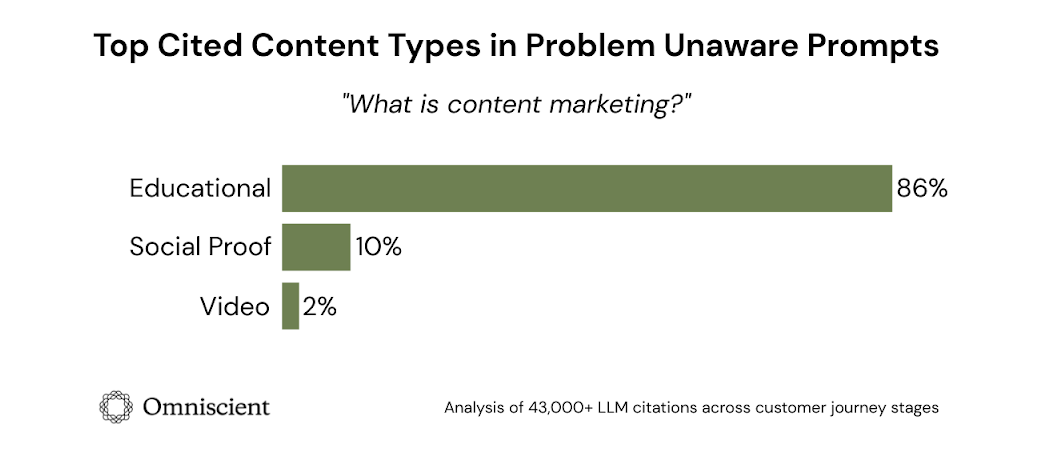

Problem Unaware: Educational content dominates AI citations

In Problem Unaware queries, the user is learning:

- Definitions

- Categories

- Mental models

- How does this work?

It makes sense that the citations skew towards sources that teach. Education and Thought Leadership content dominates this stage, making up 86% of citations, including:

- Educational Blogs, 70.1%, like:

  - Opendoor’s “What Really Determines Property Value: Complete Guide”

- How-to Guides / Tutorials, 13.5%, like:

  - SoFi’s “How to Budget on a Fluctuating Income”

- Research / Trend Analysis, 1.8%, like:

  - Academic research, such as papers on arXiv

- Statistics Roundups, 0.01%, like:

  - Bankrate’s Money and Financial Stress Statistics

At the Problem Unaware stage, your brand can capitalize on the primacy effect. If a potential customer is searching for information and your brand gives them the answer, you create a lasting impression that can influence future purchasing behavior.

Social proof content (reviews, forums, listicles, case studies) is the second most common category, but it still appears rarely, making up only 10% of citations in Problem Unaware prompts.

Video comes in third at 2%, mostly from YouTube, and even these citations behave like educational content. Examples of video titles cited at this stage include:

- “How to Keep Track of Your Conversations”

- “Why Your Google Ads Impressions Dropped”

In contrast, at the Solution Aware stage (more on this later), video citations include titles like “I Ranked Every Budgeting App, Here’s What’s ACTUALLY Good”, which reflects how video content shifts from educational toward social proof as you move down the funnel.

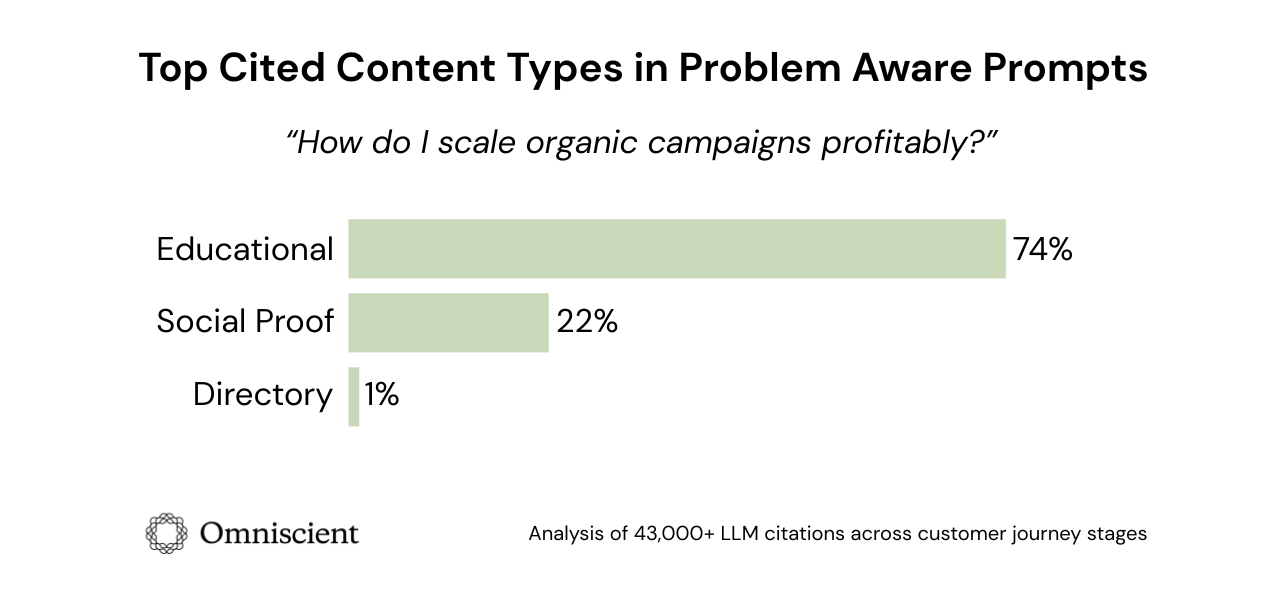

Problem Aware: Educational still leads, but social proof starts climbing

In Problem Aware queries, the user has a pain point. They aren’t comparing brands yet, but they are looking for:

- Ways to solve a problem

- Frameworks

- Early-stage evaluation criteria

Educational content still dominates here, making up 74% of total citations. That’s down from 86% in top-of-the-funnel prompts, but still a clear majority.

Social proof, however, more than doubles in middle-of-the-funnel prompts, rising from 10% to 22% of citations, with most of it coming from listicles:

- Listicles, 19.3%, like:

  - Zapier’s “The 11 best SEO tools”

- Forums, 1.1%

  - Mostly Reddit threads, plus others from sites like Quora

- Review Blogs, 1.1%

  - Review blogs like “Insightly CRM Review” and review sites like Capterra’s user-generated “Reviews of Chase Software”

- Social Media, 0.9%

  - LinkedIn, Lemon8, Instagram, Facebook

- Case Studies, 0.03%

  - AIOSEO’s Maesta Case Study

This suggests that when a buyer expresses a pain point, LLMs begin pulling from sources that validate that experience:

- Other people describing the same problem

- Outcomes and results

- Proof that something works in practice

Directory pages, like those published by Clutch and DesignRush, come in third but make up only 1% of citations in the Problem Aware stage. Directory content rises slightly to 2% in the final stage (Solution Aware), suggesting LLMs lean a little more on directory-style information as the buyer gets closer to purchase.

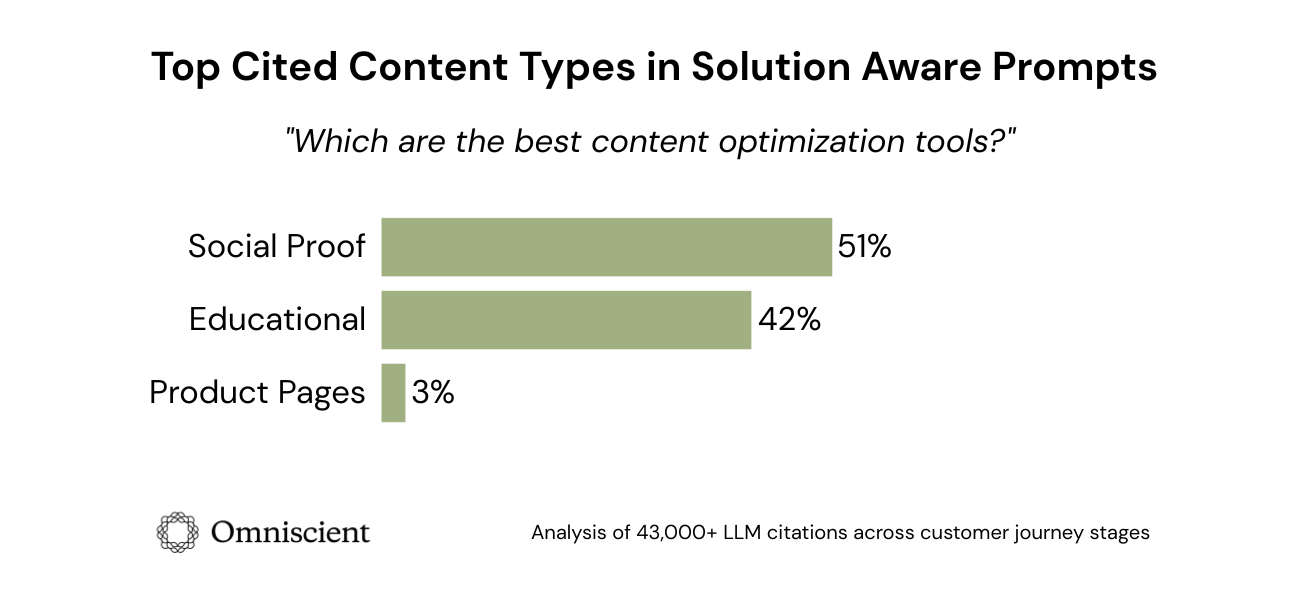

Solution Aware: Social proof becomes the primary citation source

In Solution Aware prompts, the buyer is essentially asking:

- What should I buy?

- Who is winning the category?

- What do people recommend?

More than half (51%) of all LLM citations for Solution Aware queries point to social proof. At the bottom of the funnel, that breaks down as:

- Listicles, 47.3%

- Forums, 2.1%

- Reviews, 1.5%

- Social Media, 0.4%

Social proof sends a strong trust signal to buyers (a finding from our previous research on buyer behavior in LLMs). It answers the questions buyers are actually asking at this stage, and it’s perceived as less biased than brand-owned messaging. That’s why listicles account for such a large share of this content type: they map directly to the buyer’s intent.

Even in Solution Aware prompts, educational content makes up 42% of citations.

Informational posts and pages don’t stop earning LLM citations at the bottom of the funnel; they just lose their monopoly.

Product pages come in third, suggesting that when a customer asks for a solution, LLMs pull details about functionality from these pages.

Methodology

I created 180 total LLM prompts, with 60 queries tailored to each customer journey stage: Problem Unaware, Problem Aware, and Solution Aware.

To reduce category bias, I ensured equal representation across industries:

- B2B SaaS: SEO, CRM Tools, and Cybersecurity

- Consumer Products: Electronics, Beauty, and Fitness

- Professional Services: Consulting, Real Estate, and Legal

- Finance: Personal Finance, Credit Cards, and Insurance
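The design above implies an even prompt grid. Assuming “equal representation” means the same number of prompts per subcategory (the post doesn’t spell this out), the 12 subcategories split each stage’s 60 prompts into 5 apiece. A quick sketch that builds and checks that grid:

```python
from itertools import product

STAGES = ["Problem Unaware", "Problem Aware", "Solution Aware"]
VERTICALS = {
    "B2B SaaS": ["SEO", "CRM Tools", "Cybersecurity"],
    "Consumer Products": ["Electronics", "Beauty", "Fitness"],
    "Professional Services": ["Consulting", "Real Estate", "Legal"],
    "Finance": ["Personal Finance", "Credit Cards", "Insurance"],
}
PROMPTS_PER_STAGE = 60

# Flatten the industries into (vertical, subcategory) pairs.
subcategories = [(v, s) for v, subs in VERTICALS.items() for s in subs]

# Assumption: prompts are split evenly across subcategories within each stage.
per_cell, remainder = divmod(PROMPTS_PER_STAGE, len(subcategories))
assert remainder == 0, "60 prompts must split evenly across subcategories"

# One slot per (stage, vertical, subcategory, prompt index).
grid = [
    (stage, vertical, sub, i)
    for stage, (vertical, sub) in product(STAGES, subcategories)
    for i in range(per_cell)
]

print(len(subcategories), per_cell, len(grid))  # 12 5 180
```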

Using Peec AI, I collected 43,282 total AI citations across 5 different large language models.

Then I used natural language processing in Python to categorize citation sources into these seven content types:

1. Brand Foundation: Think of this as the brochure version of your brand’s website: the pages meant to establish foundational messaging and narrative consistency.

   - About Us pages, FAQs, legal and policy pages, contact pages

2. Directory Sites and Reference Pages: Places users go to research brands, covering functionality, differentiation, and general information.

   - Directory sites, brand profiles, and documentation and support pages, such as software integrations and explanations of how the product or service works

3. Education and Thought Leadership: Owned and earned content meant to teach, persuade, and build authority.

   - Blogs, how-to guides, research, trends, and stats roundups

4. News and Press: Brand updates for the public record.

   - Funding announcements, leadership changes, software updates, and press releases

5. Product Pages and Commercial Intent: Pages that drive purchase decisions and remove friction.

   - Product descriptions, e-commerce product pages, pricing pages, campaign landing pages

6. Reviews and Social Proof: Content that validates whether a brand is actually worth it.

   - Customer reviews, listicles, forums, social media, case studies

7. Video: A separate, high-effort format that the industry widely assumes overperforms in AI search.
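The post doesn’t describe the NLP pipeline itself, so here is a deliberately simpler stand-in: a minimal sketch, assuming a rule-based classifier that matches citation URLs on domain and path keywords instead of NLP. Every domain, path hint, and example URL is a hypothetical illustration. It also shows how per-stage percentages like the ones reported above can be computed: count citations per content type within each stage, then divide by that stage’s total.

```python
from collections import Counter, defaultdict
from urllib.parse import urlparse

# Hypothetical rules, checked in order: (content type, domains, URL-path hints).
# Domains and hints are illustrative assumptions, not the study's classifier.
RULES = [
    ("Video", ("youtube.com", "youtu.be", "vimeo.com"), ()),
    ("Reviews and Social Proof",
     ("reddit.com", "quora.com", "capterra.com", "linkedin.com"),
     ("/review", "best-", "/top-", "case-stud")),
    ("Directory Sites and Reference Pages",
     ("clutch.co", "designrush.com"),
     ("/docs", "/support", "/integrations")),
    ("News and Press", (), ("/news", "/press")),
    ("Product Pages and Commercial Intent", (), ("/pricing", "/product", "/shop")),
    ("Brand Foundation", (), ("/about", "/faq", "/contact", "/privacy", "/terms")),
    ("Education and Thought Leadership", (), ("/blog", "/guide", "/learn", "how-to")),
]


def matches_domain(host: str, domains: tuple) -> bool:
    """True if host equals one of the domains or is a subdomain of it."""
    return any(host == d or host.endswith("." + d) for d in domains)


def classify(url: str) -> str:
    """Assign a citation URL to the first content type whose rule matches."""
    parsed = urlparse(url.lower())
    host, path = parsed.netloc, parsed.path
    for label, domains, path_hints in RULES:
        if matches_domain(host, domains) or any(h in path for h in path_hints):
            return label
    return "Unclassified"  # left for manual review


def shares_by_stage(citations):
    """Turn (stage, url) pairs into {stage: {content type: % of citations}}."""
    counts = defaultdict(Counter)
    for stage, url in citations:
        counts[stage][classify(url)] += 1
    return {
        stage: {label: 100 * n / sum(c.values()) for label, n in c.items()}
        for stage, c in counts.items()
    }


# Hypothetical citations; example.com URLs stand in for real sources.
sample = [
    ("Problem Unaware", "https://www.example.com/blog/what-is-content-marketing"),
    ("Problem Unaware", "https://www.youtube.com/watch?v=abc"),
    ("Solution Aware", "https://www.example.com/blog/best-seo-tools"),
    ("Solution Aware", "https://www.reddit.com/r/SEO/comments/xyz"),
]
result = shares_by_stage(sample)
print(result["Problem Unaware"])
# {'Education and Thought Leadership': 50.0, 'Video': 50.0}
print(result["Solution Aware"])
# {'Reviews and Social Proof': 100.0}
```

Rule order matters in this sketch: the first matching rule wins, so a listicle hosted under `/blog/` counts as social proof rather than education. Pages that URLs alone can’t place would still need NLP or manual review.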