In 2026, AI engines are a primary interface for customers learning about brands and their products or services.

From Omniscient’s buyer behavior research, we learned that 42% of B2B decision-makers use an LLM in the first step of the buying process.

That use takes many forms. Sometimes it’s comparing competitors, researching pricing, or evaluating whether a product is worth it. Other times, it’s asking what customers think, whether a brand is trustworthy, or how well a product actually works.

In those moments, users aren’t always going directly to the source (the brand website) or scrolling review sites; they use an AI engine to synthesize the best available information.

Marketers must maintain strong brand visibility and perception, and ensure information about their brand is accurate, everywhere their customers search.

This raises a more uncomfortable question for brands: when AI answers questions about you, whose version of your story is it telling?

In other words, when users explicitly mention a brand in an LLM query, where does the model actually get its information?

I analyzed 23,000+ citations across hundreds of branded queries to understand which sources LLMs rely on most when citing their answers to brand-specific questions.

Methodology

I used Peec AI, an AI visibility platform, to run this analysis. Peec takes the prompts I select and runs them through multiple AI engines (ChatGPT, Perplexity, Gemini, AI Mode, and AI Overviews), returning both the LLM output and the clickable citation sources referenced in each response.

I imported 240 unique prompts into Peec, each containing a specific brand name. Because every prompt was queried across multiple AI models and produced multiple citations per output, the dataset totaled 23,387 unique sources.

The prompts were evenly distributed across:

Four industries

- B2B SaaS

- Consumer Products

- Professional Services

- Finance

Six user intent types

- Competitor Comparison: “Which is a better CRM for mid-sized SaaS teams: HubSpot or Salesforce?”

- Customer Reviews: “What do customers say about Hoka? Are reviews generally positive or negative?”

- Direct Evaluation: “Is Chase a good choice for small business banking?”

- Functionality / Integrations: “Does Rare Beauty makeup include liquid blush products?”

- Proof / Evidence: “What evidence or real-world results support Bain for management consulting?”

- Purchasing / Pricing: “What fees does Square charge?”

After collecting the outputs, I analyzed the citation sources referenced in each response, using Python to categorize them into:

1. Owned Content

Any content coming from a domain that matches the brand mentioned in the query

2. Earned Media

Third-party content not published by a commercial brand, including:

- Directory / Reference Sites: Wikipedia, Product Hunt, job sites like Built In, and similar reference hubs

- Editorial / Independent Media: Publications like the New York Times, TechCrunch, GQ, or industry blogs that exist to inform rather than sell

- Forums / Social Media: User-generated content and open platforms like Reddit, X, and community forums

- Review Sites: Centralized review platforms such as G2, Capterra, and TrustRadius

3. Commercial Brand Content

Any content published by a brand that is not the brand named in the query

This includes listicles, comparison blogs, case studies, and reviews written by a direct competitor or by another brand with its own commercial incentives.

For example:

- Convert publishing a case study about Growth Hit

- Alibaba publishing a “CeraVe vs La Roche-Posay” comparison blog

Even when the publisher isn’t a direct competitor, the content is still brand-authored rather than independent.

While citations don’t fully capture internal model weighting, they represent the sources LLMs choose to surface and legitimize in user-facing answers.
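For readers who want to reproduce the categorization step, here is a minimal Python sketch of how a cited URL could be sorted into the three categories above. It is not the script used for this analysis. The lookup table `EARNED_DOMAINS`, the helpers `registered_domain` and `classify_citation`, and the fallback rule (any domain that is neither owned nor on the earned list counts as Commercial Brand Content) are all assumptions made for illustration; a real run would need a much larger, hand-reviewed domain list.

```python
from typing import Optional, Set, Tuple
from urllib.parse import urlparse

# Hypothetical lookup table built from the examples named in this article.
# The full domain list used in the study is not published.
EARNED_DOMAINS = {
    "wikipedia.org": "Directory / Reference Sites",
    "producthunt.com": "Directory / Reference Sites",
    "nytimes.com": "Editorial / Independent Media",
    "techcrunch.com": "Editorial / Independent Media",
    "reddit.com": "Forums / Social Media",
    "x.com": "Forums / Social Media",
    "g2.com": "Review Sites",
    "capterra.com": "Review Sites",
    "trustradius.com": "Review Sites",
}


def registered_domain(url: str) -> str:
    """Return a rough registrable domain, e.g. 'www.reddit.com' -> 'reddit.com'.

    Naive on purpose: it keeps the last two labels, so it mishandles
    suffixes such as '.co.uk'.
    """
    host = (urlparse(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def classify_citation(url: str, brand_domains: Set[str]) -> Tuple[str, Optional[str]]:
    """Return (category, earned-media subtype or None) for one cited URL."""
    domain = registered_domain(url)
    if domain in brand_domains:
        return ("Owned Content", None)
    if domain in EARNED_DOMAINS:
        return ("Earned Media", EARNED_DOMAINS[domain])
    # Assumption: everything else is treated as another brand's content.
    # An unlisted independent blog would be misfiled here.
    return ("Commercial Brand Content", None)


if __name__ == "__main__":
    hoka = {"hoka.com"}  # illustrative set of brand-owned domains
    for url in (
        "https://www.hoka.com/",
        "https://www.reddit.com/r/running/",
        "https://www.alibaba.com/",
    ):
        print(url, "->", classify_citation(url, hoka))
```

The fallback rule is the weakest part of this sketch: in practice, unmatched domains would need manual review before being counted as commercial content.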

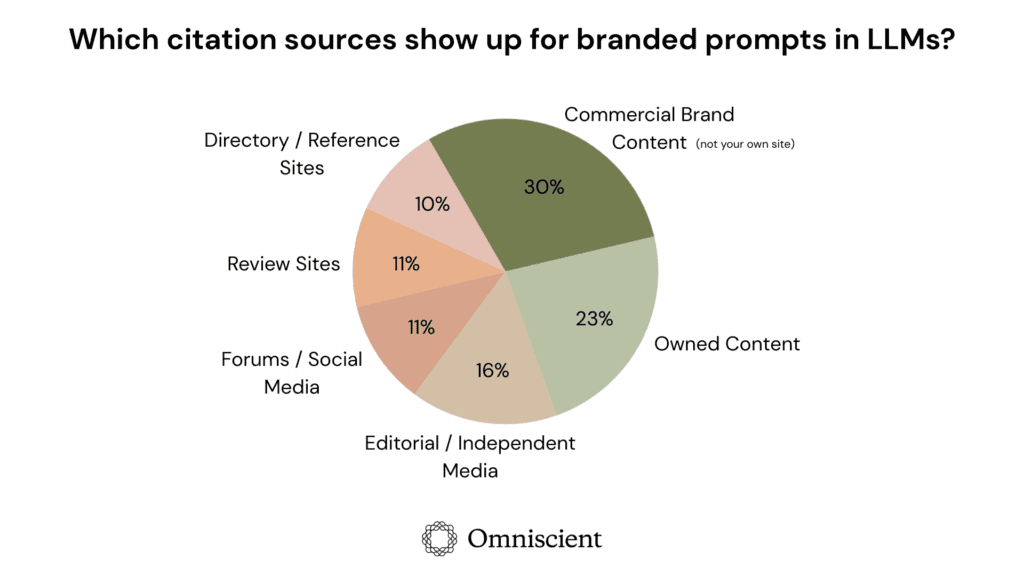

Off-page content dominates branded LLM citations

When a user mentions a brand by name in their LLM query, earned media sources account for nearly half (48%) of all citations.

Breaking that down:

- 16% come from editorial sites and independent media

- 11% from forums and social media platforms

- 11% from review sites

- 10% from directory or reference sites

The next most common category is commercial brand content, which makes up 30% of all citations in branded queries.

That leaves owned brand content with the smallest share, just 23% of total citations.

This share varies by brand maturity and content footprint, but the aggregate trend holds across industries.

When someone asks an LLM about your brand, most of what it references comes from outside your website.
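As a quick check on how these figures fit together, the short Python sketch below adds up the rounded percentages reported in this section. The variable names are mine, and the only inputs are the numbers already stated above. The three top-level shares sum to 101 rather than 100, presumably because each figure was rounded independently.

```python
# Rounded percentages as reported in this article (not raw counts).
earned_breakdown = {
    "Editorial / Independent Media": 16,
    "Forums / Social Media": 11,
    "Review Sites": 11,
    "Directory / Reference Sites": 10,
}

top_level = {
    "Earned Media": sum(earned_breakdown.values()),
    "Commercial Brand Content": 30,
    "Owned Content": 23,
}

# "Off-page" here means everything not published on the brand's own domain.
off_page = top_level["Earned Media"] + top_level["Commercial Brand Content"]
total = sum(top_level.values())

print(top_level["Earned Media"])  # 48
print(off_page)                   # 78
print(total)                      # 101, consistent with rounding
```

On these rounded figures, roughly 78% of citations in branded queries come from sources the brand does not publish.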

What this looks like in practice:

Input:

“Which is better for eczema and sensitive skin: CeraVe or La Roche-Posay?”

(Intent: Competitor Comparison)

Output:

“Both CeraVe and La Roche-Posay are highly recommended … the ideal choice depends on your specific skin needs…”

Sources cited:

- Alibaba (Commercial Brand Content)

- Reddit (Forums / Social Media)

- Dermatology Times (Editorial / Independent Media)

- La Roche-Posay.com (Owned Content)

One query, four citations. Each from a different category, all contributing to how the LLM frames the answer.

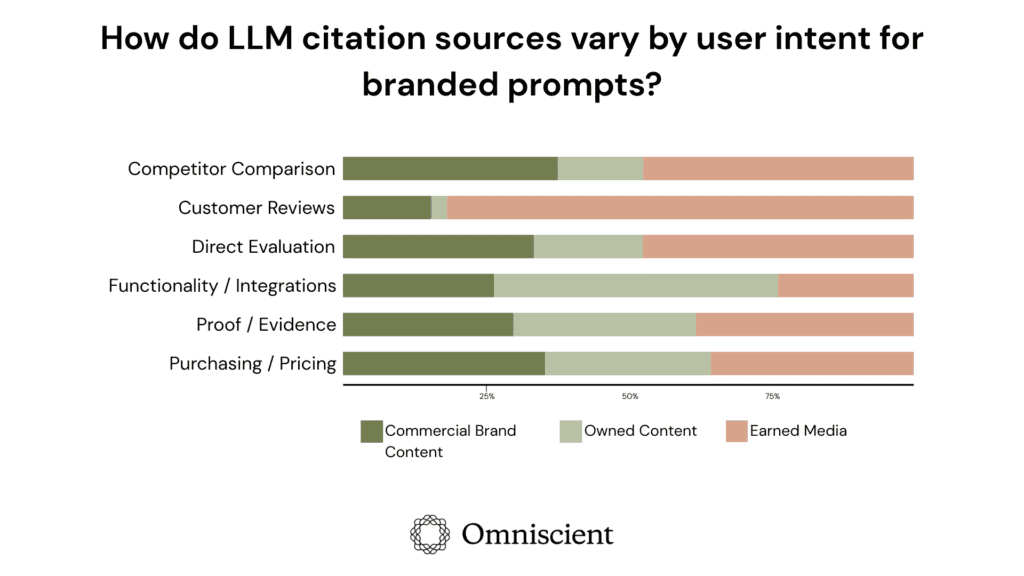

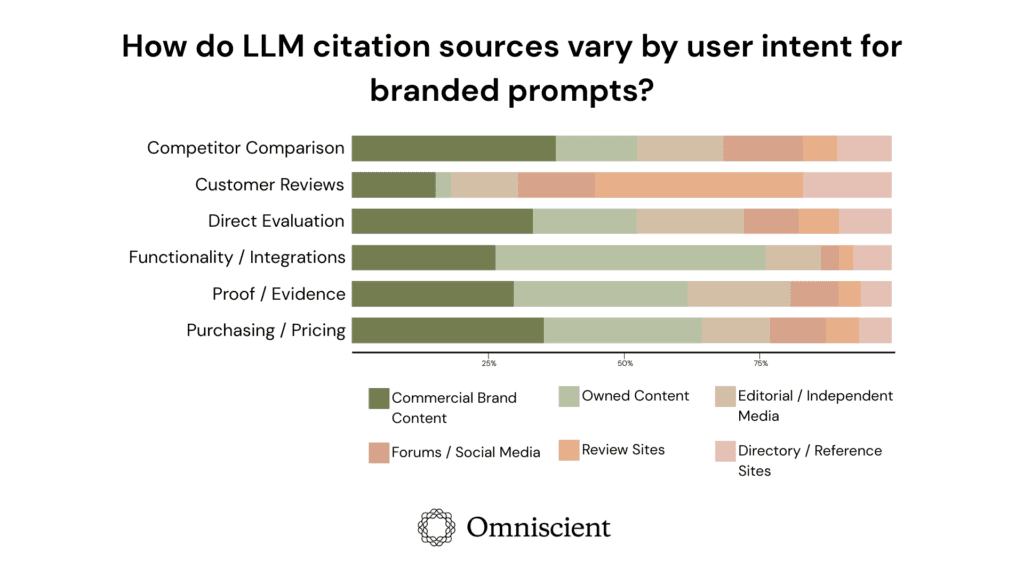

Citation sources vary by search intent

(See alternate graph below for a further breakdown of citation sources)

While off-page content dominates overall, citation patterns change meaningfully based on what the user is asking about a brand.

- Earned Media dominates in customer review queries.

When users ask what customers think, LLMs overwhelmingly cite earned media sources (82% of the time).

For example: “What do customers say about Hoka? Are reviews generally positive or negative?”

LLMs most often cited Trustpilot, Reddit, and social platforms like Lemon8, not Hoka’s own site.

- Owned Content performs best for functionality and integrations.

When users are looking for factual product information, owned content is cited more often than any other category, 50% of the time.

In queries like “Does Rare Beauty include liquid blush products?”, the LLMs most often cited Rare Beauty’s owned product and category pages.

- Commercial Brand Content is cited fairly evenly across all user intent groups.
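The per-intent percentages above come from grouping citations by the intent of the prompt that produced them. A minimal Python sketch of that grouping follows. The function `category_share_by_intent` and the `toy_records` list are hypothetical and exist only to show the calculation; the toy rows are made up and are not data from this study.

```python
from collections import Counter, defaultdict


def category_share_by_intent(records):
    """Compute each category's share of citations within every intent.

    `records` is an iterable of (intent, category) pairs, one per citation.
    Returns {intent: {category: percentage rounded to one decimal}}.
    """
    counts = defaultdict(Counter)
    for intent, category in records:
        counts[intent][category] += 1

    shares = {}
    for intent, counter in counts.items():
        total = sum(counter.values())
        shares[intent] = {
            category: round(100 * n / total, 1)
            for category, n in counter.items()
        }
    return shares


# Made-up rows for illustration only; NOT results from the analysis.
toy_records = [
    ("Customer Reviews", "Earned Media"),
    ("Customer Reviews", "Earned Media"),
    ("Customer Reviews", "Earned Media"),
    ("Customer Reviews", "Owned Content"),
    ("Functionality / Integrations", "Owned Content"),
    ("Functionality / Integrations", "Commercial Brand Content"),
]

shares = category_share_by_intent(toy_records)
for intent, breakdown in shares.items():
    print(intent, breakdown)
```

Computing shares within each intent, rather than across the whole dataset, is what makes figures like "82% of citations in review queries" comparable across intents with different citation volumes.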

Below, you can check out the citation breakdown in further depth than the three categories above.

What this means for brand visibility in AI

When a user asks an AI engine about a specific brand, LLMs rarely rely on the brand’s own narrative alone.

Brand visibility in AI is governed by something deeper than SEO alone: brand gravity.

This research shows that LLMs don’t just pull answers from your website; they synthesize signals from across the ecosystem (earned media, reviews, community conversations, and third-party content) to decide what to surface for a given query. The citation data suggests that what matters to these models isn’t whether you own the page, but how consistently and credibly your brand is reinforced across the web.

Here are my takeaways:

- Brand information in LLMs comes mostly from off-site sources.

Even in explicitly branded queries, models synthesize information from editorial coverage, reviews, forums, and competitor-authored content to construct their answers.

- Citation behavior closely mirrors user intent.

Whether due to model design or the availability of source material, LLMs pull sources differently based on whether the user is asking for reviews, product details, comparisons, or validation.

- Brand visibility in AI is shaped by the broader content ecosystem, not a single source.

Brands are being defined in LLM outputs before users even prompt the engine: through how others talk about them, compare them, review them, and contextualize them.

Brand presence in LLM search is not only about controlling the narrative outright, but also about maintaining consistency and truth in earned and influenced sources, because those sources are what LLMs draw on to represent brands in response to user questions.