The ultimate goal of search engine optimization (SEO) is to make your website more visible so people can easily find it when they search online.

But to do that, you must understand what will interest people and what they’re searching for. Public web data and proxy services are great ways to figure that out.

Let’s break down how to use public web data for SEO and how other tools, like proxy services, can help you gather key insights into your audience and industry.

Understanding types of public web data

Public web data is any information that’s freely available for anyone to access online. Think of it as the public library for the Internet.

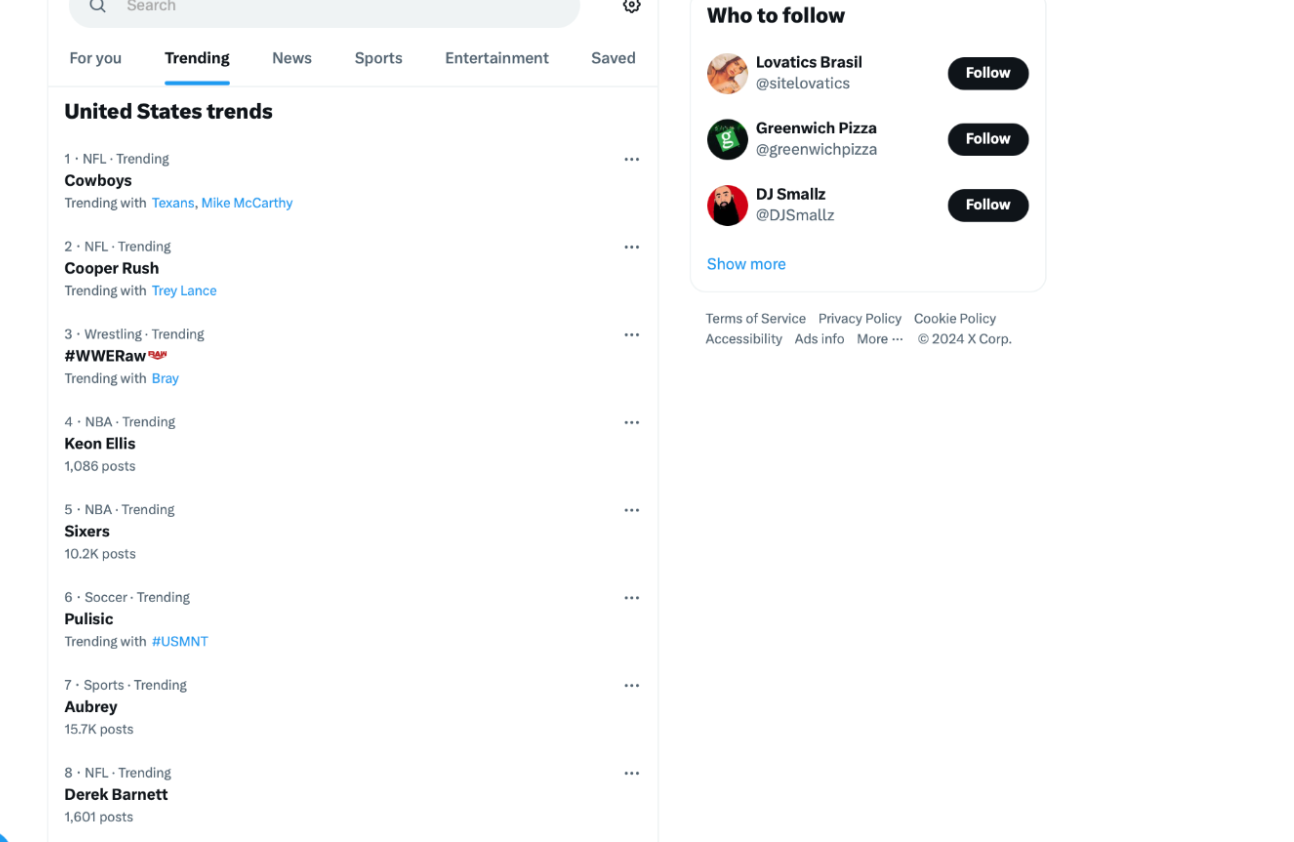

It offers valuable insights you can use, and you don’t need special permission to explore it. For example, when you see trending hashtags on X, read an online news article, or look up a product review, you’re accessing public web data.

It comes from a variety of sources, such as:

Social media

Social media platforms are where real-time conversations, trends, and consumer preferences are on full display.

Publicly available data from social media can help you stay on top of hot topics in your industry.

For SEO, this data helps you:

- Identify trending topics to create timely and relevant content.

- Understand audience language and sentiment to refine keyword targeting.

- Monitor competitors’ social activity for potential content gaps or new opportunities.

For example, you can track trending hashtags around seasonal events or industry buzzwords to inform blog topics or meta descriptions that align with what your audience is actively looking for.
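To make the hashtag idea concrete, here is a minimal Python sketch that counts which hashtags show up most often in a batch of posts. The sample posts and the `top_hashtags` helper are made up for illustration; it assumes you already have post text from a source you are permitted to collect.

```python
import re
from collections import Counter

HASHTAG_RE = re.compile(r"#(\w+)")

def top_hashtags(posts, n=3):
    """Return the n most common hashtags (lowercased) across a list of post texts."""
    counts = Counter()
    for text in posts:
        # Count each hashtag once per post so a single spammy post cannot dominate.
        counts.update({tag.lower() for tag in HASHTAG_RE.findall(text)})
    return counts.most_common(n)

# Hypothetical sample posts, not real data.
sample_posts = [
    "Best brunch in town #VeganRestaurants #brunch",
    "Trying a new spot tonight #veganrestaurants",
    "Holiday menu is live #HolidayEats #brunch",
    "#VeganRestaurants are everywhere now",
]

print(top_hashtags(sample_posts, n=2))
# → [('veganrestaurants', 3), ('brunch', 2)]
```

The hashtags at the top of the list are candidates for blog topics or meta descriptions.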

News articles

News sites often rank high in the search engine results pages (SERPs), and for good reason. They provide a pulse on current events, industry changes, and trending topics.

So, they’re a great source of emerging trends and frequently searched keywords.

For SEO:

- Use news articles to discover hot topics you can repurpose into evergreen content.

- Extract commonly cited statistics or expert opinions to add credibility to your articles.

- Identify breaking stories that could inspire timely blog posts, press releases, or FAQs.

Tools like Google Alerts can help you stay on top of relevant stories so your SEO strategies stay dynamic and topical.

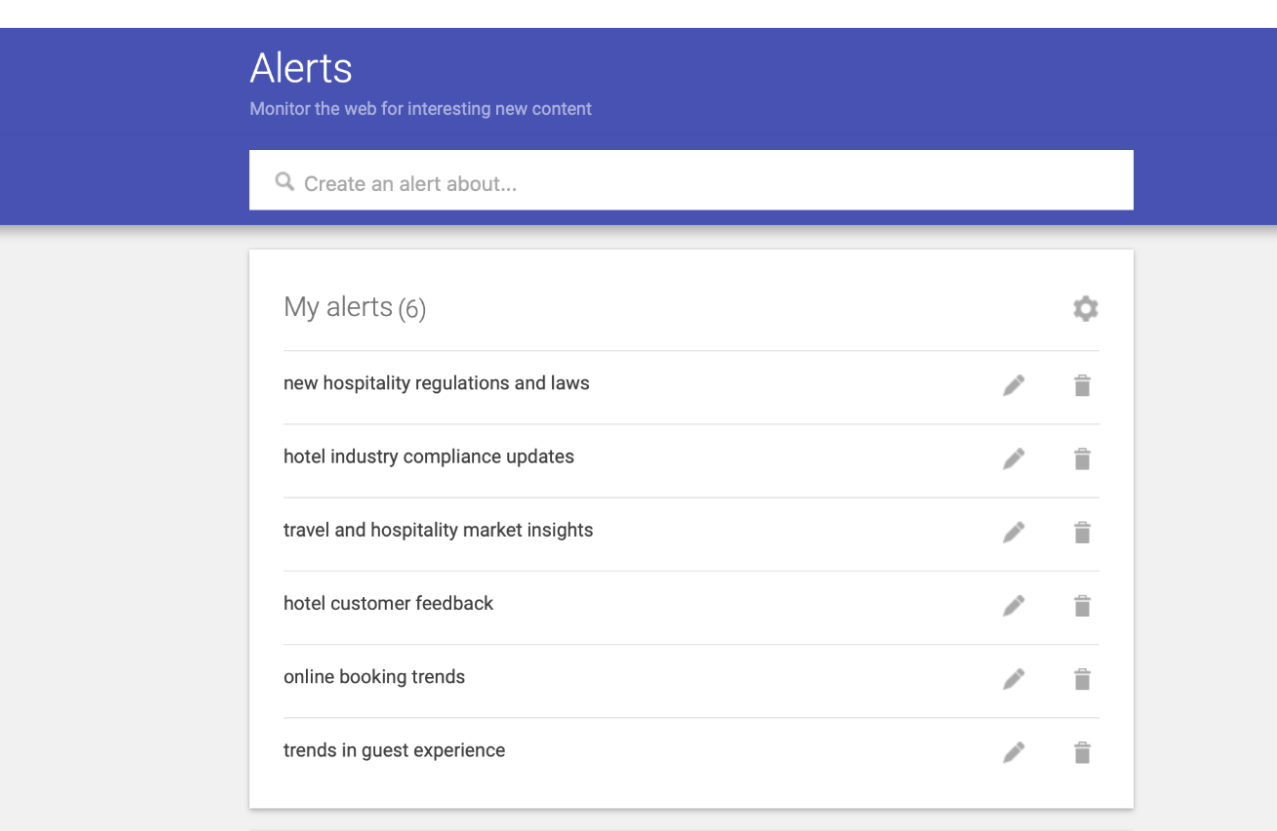

Look at this example of Google Alerts for a hospitality company.

Screenshot provided by author

Government databases

Government databases are also publicly accessible sources of web data. They often provide high-quality, credible information, such as census data, economic statistics, and geographic data.

This type of data can be a goldmine for local SEO, industry reports, and data-driven content strategies.

For SEO:

- Create highly shareable, eye-catching infographics or reports based on government statistics to earn backlinks and social shares.

- Optimize local SEO strategies using geographic and demographic data.

- Develop content targeting niche audiences based on trends in government publications.

For example, if you’re a law firm, you could write a blog post highlighting local crime rates to position your firm as a thought leader while improving local search rankings.

Academic research

Universities and research institutions often release academic research papers, journals, and studies that have lots of credible information. You can use these sources to lend authority to your content.

Or, you can use them as a source of inspiration for building long-form, high-quality blog posts or whitepapers.

For SEO:

- Reference academic studies to create authoritative and in-depth content.

- Identify long-tail keywords from academic research titles and abstracts.

- Develop case studies or comparative analyses using data-backed insights.

Google Scholar and institutional repositories are great starting points for finding academic research relevant to your niche.

Reviews and ratings

Consumer reviews and ratings on platforms like Amazon, Yelp, and Google Reviews give you direct insight into customer experiences and preferences.

Leverage this data to understand pain points and uncover unique value propositions in your industry.

For SEO:

- Extract common themes or phrases from reviews to inform keyword targeting.

- Identify recurring customer concerns to create blog posts that address specific issues.

- Analyze competitor reviews to reveal gaps in their offerings and position your content accordingly.

For example, let’s say you read a review that highlights a specific feature in a product, or the lack of one. You can then use that information to write a how-to guide, a tutorial, or a comparison post targeting those keywords.

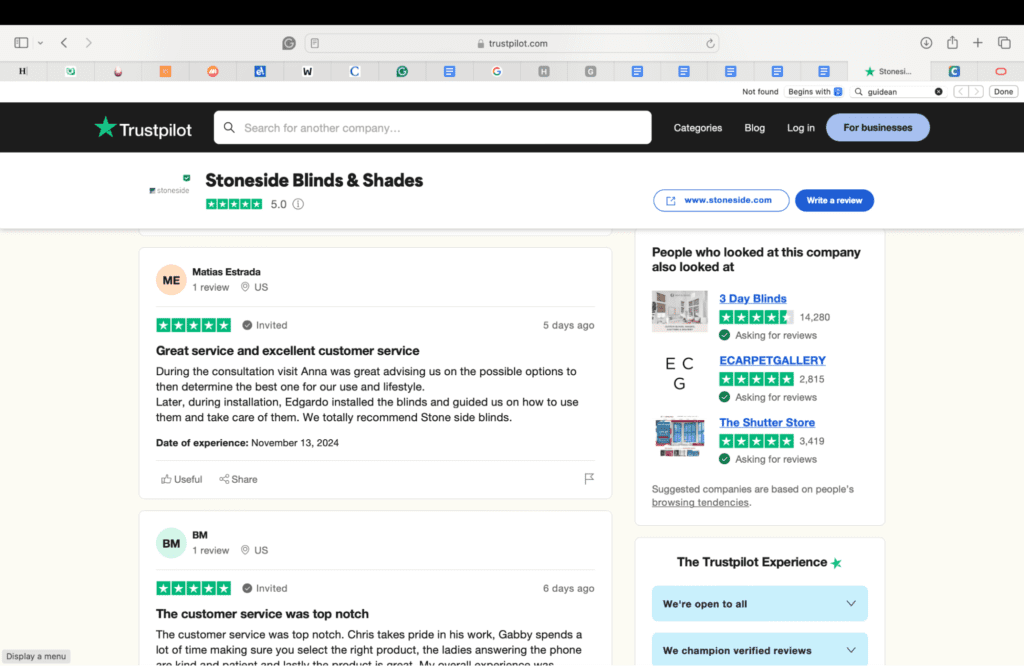

Stoneside Blinds & Shades is one of the top-rated companies on Trustpilot. It can use the reviews on the site to inform its content marketing strategy.

For example, the customer who left this review enjoyed the fact that the rep from Stoneside Blinds & Shades guided them throughout the entire process. This could inspire the company to create a blog post about the value of expert guidance when choosing window treatments.
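If you have more than a handful of reviews, you can surface recurring themes with a simple phrase count. The sketch below is one rough way to do it in Python: it strips a few filler words and counts two-word phrases. The reviews, the stop-word list, and the `common_phrases` helper are all hypothetical, and because filler words are removed first, some phrases may join words that were not strictly adjacent in the original text.

```python
import re
from collections import Counter

# A tiny stop-word list for illustration; a real project would use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "was", "is", "to", "of", "me", "i", "my", "it", "in", "for", "with"}

def common_phrases(reviews, size=2, n=5):
    """Return the n most frequent word sequences of the given size across reviews."""
    counts = Counter()
    for review in reviews:
        words = [w for w in re.findall(r"[a-z']+", review.lower()) if w not in STOP_WORDS]
        # Use a set so each phrase is counted at most once per review.
        grams = {" ".join(words[i:i + size]) for i in range(len(words) - size + 1)}
        counts.update(grams)
    return counts.most_common(n)

# Hypothetical reviews, written for this example.
sample_reviews = [
    "The rep guided me through the entire process and the expert guidance was great.",
    "Expert guidance made choosing blinds easy.",
    "Loved the expert guidance during the entire process.",
]

print(common_phrases(sample_reviews, size=2, n=2))
# → [('expert guidance', 3), ('entire process', 2)]
```

Phrases that keep coming up, like "expert guidance" here, are good candidates for keyword targeting and blog topics.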

Data sources you should know about

The web offers countless resources for accessing high-quality and free data. These sources can help you gather the insights you need to refine your SEO strategies:

Government websites

- USDA.gov (United States Department of Agriculture)

- BEA.gov (Bureau of Economic Analysis)

- SBA.gov (Small Business Administration)

- BLS.gov (Bureau of Labor Statistics)

- Census.gov

- Data.gov

Social media platforms

- X

Academic databases

- Education Resources Information Center (ERIC)

- National Bureau of Economic Research (NBER)

- Google Scholar

- Science.gov

- PubMed

Open data portals

- San Francisco Open Data Portal

- California Open Data

- Chicago Data Portal

- NYC Open Data

Industry reports and publications

- Pew Research Center

- IBISWorld

- Forrester

- Statista

- Nielsen

Review sites

- Google Reviews

- Angie’s List

- Glassdoor

- Trustpilot

- Yelp

News sites

- The Wall Street Journal

- Local news websites

- The New York Times

- Google News

- USA Today

Leveraging public web data for SEO

Have you ever wondered how top-performing websites always seem to have the best content, keywords, and structure? The secret often lies in public web data, and you can leverage it, too.

If your business lacks in-house expertise, outsourcing SEO and development tasks can be a game-changer. Instead of struggling with complex implementations, you can hire developers who specialize in optimizing your digital presence.

Content creation and optimization

It’s cliché. But we’ll say it anyway. Content is king. And the key to crafting content that truly resonates with your audience is understanding what they’re searching for.

Web scraping is your best friend here.

Take review sites, for example. You can read what customers love (or hate) about a product or service. This can spark ideas for blog posts, FAQs, or even detailed guides.

Maybe you’re in the food service industry. You notice people frequently mention long wait times at local restaurants. You could create a blog post about “The Best Restaurants in [City] That Don’t Require Reservations.”

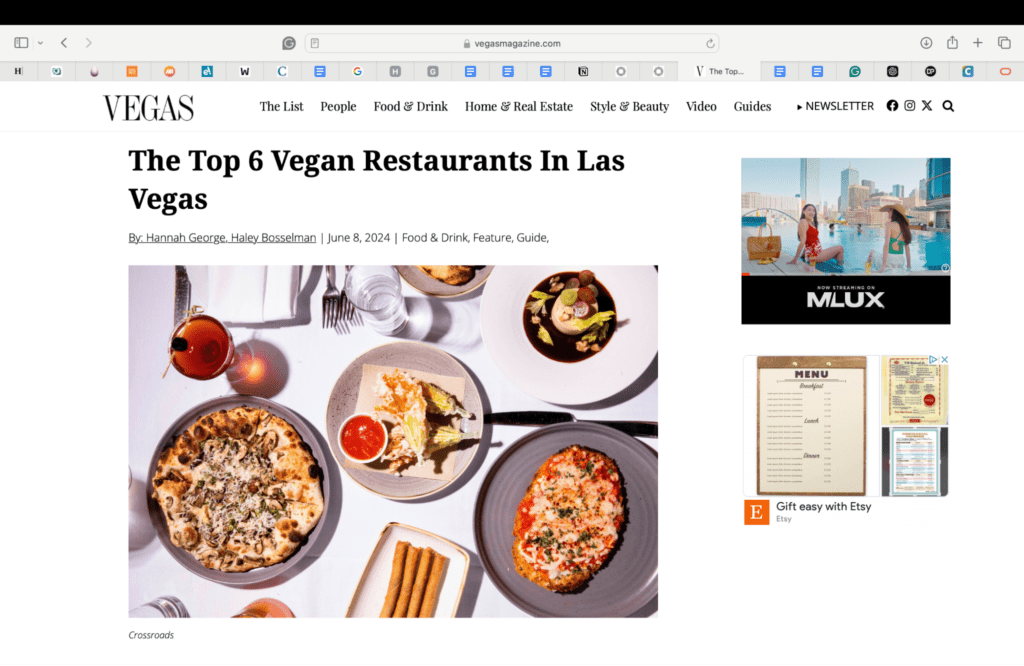

Or, you can use social media platforms like X and Pinterest to spot trends. Let’s say #VeganRestaurants is a popular hashtag. Use that as inspiration to create shareable content like “Top Vegan Restaurants in [City].”

In your content, your top priority is to address your audience’s needs and questions. Then, naturally integrate relevant keywords.

Technical SEO

Behind every great website is a well-oiled technical SEO machine.

It might not be the flashiest part of SEO. But it’s important to make sure search engines can crawl, index, and rank your site properly.

SERP data can help you identify trends, address challenges, and improve your technical SEO strategy.

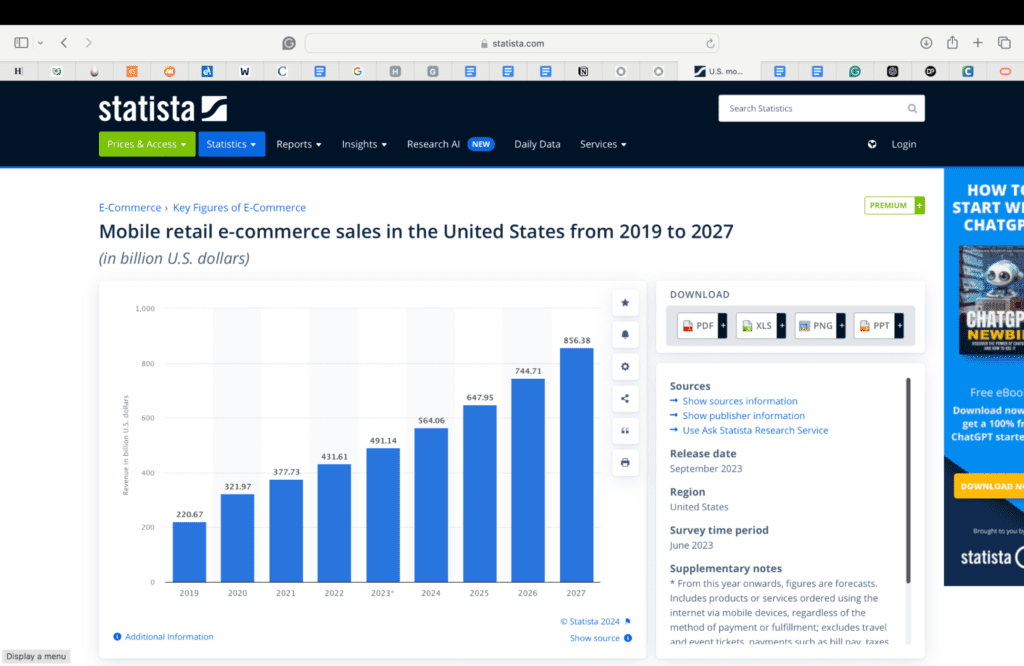

For example, you can use sites like Statista to uncover key changes in your industry. If you’re in the e-commerce industry, you might learn about an increase in mobile shopping trends.

In response, you might prioritize mobile-first indexing and Accelerated Mobile Pages (AMP) to improve the user experience (UX) on mobile devices.

Structured data is another technical SEO aspect to focus on. Public data can help you create the right schema markup.

Open datasets from sources like NYC Open Data often come in JSON or XML formats, which you can repurpose to build rich snippets.

For example, if you own a local restaurant, you can use public data about food safety standards to create informative FAQ pages, complete with FAQ schema markup. Or, real estate businesses can pull housing market data from government portals to populate schema-enhanced listings.

Adding schema helps search engines better understand your content, which can lead to higher visibility in search.
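Here is a minimal sketch of what generating FAQ schema markup could look like in Python. It builds a schema.org `FAQPage` object from question-and-answer pairs and wraps it in a JSON-LD script tag. The `faq_jsonld` helper and the sample question and answer are placeholders, not real food safety data.

```python
import json

def faq_jsonld(qa_pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }

# Placeholder content; replace with answers drawn from your own public data source.
faqs = [
    ("How often is the kitchen inspected?", "Placeholder answer based on local food safety data."),
]

markup = faq_jsonld(faqs)
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(script_tag)
```

You would paste the resulting script tag into the page's HTML and then check it with a structured data testing tool before publishing.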

Local SEO

If you’re trying to attract local customers, public web data can give you a serious leg up.

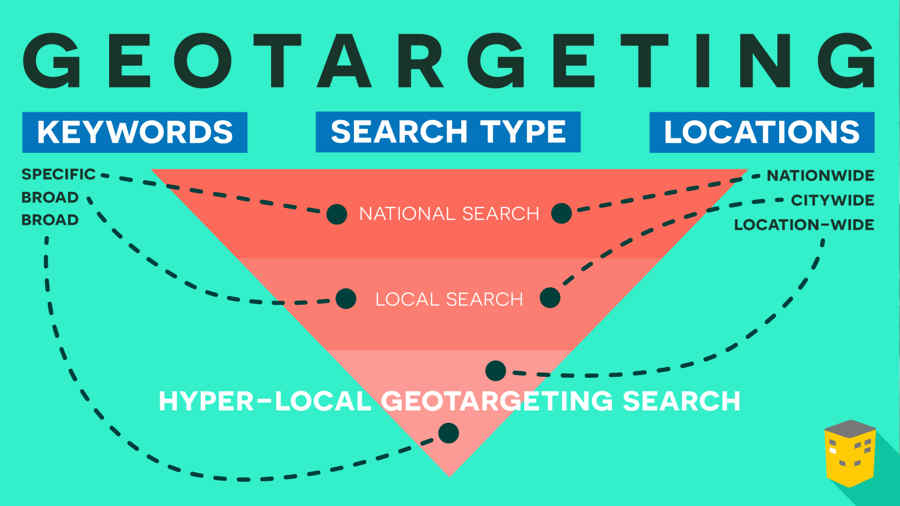

When you optimize your site for local search, you increase its chances of showing up in front of users who are searching for businesses like yours nearby. And the right data can help you nail that.

For starters, local government websites like NYC Open Data or Chicago Data Portal offer insights about community events, popular attractions, and economic trends. Use this information to create hyper-relevant content. For example, if your city is hosting a marathon, you could write a blog about “The Best Post-Marathon Eats in [City].”

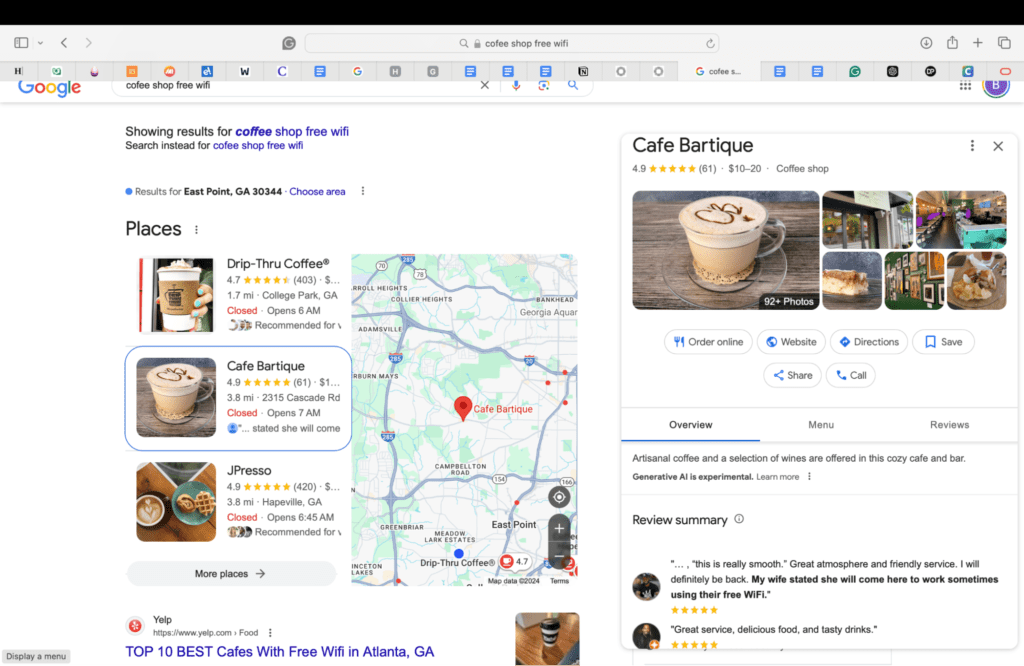

You can also use review platforms for local SEO. Comb through reviews to identify what customers value most. If people rave about your coffee shop’s free Wi-Fi, emphasize it in your Google Business profile and sprinkle it into your site copy.

Screenshot provided by author

And don’t forget about social media. Tools like Instagram’s location tags or trending hashtags can give you clues about what’s popular in your area. This helps you create content that connects with local audiences.

Enhancing data collection with proxy services

Proxy services can be a valuable resource when collecting data for SEO or market research.

A proxy acts as a middleman between your device and the internet.

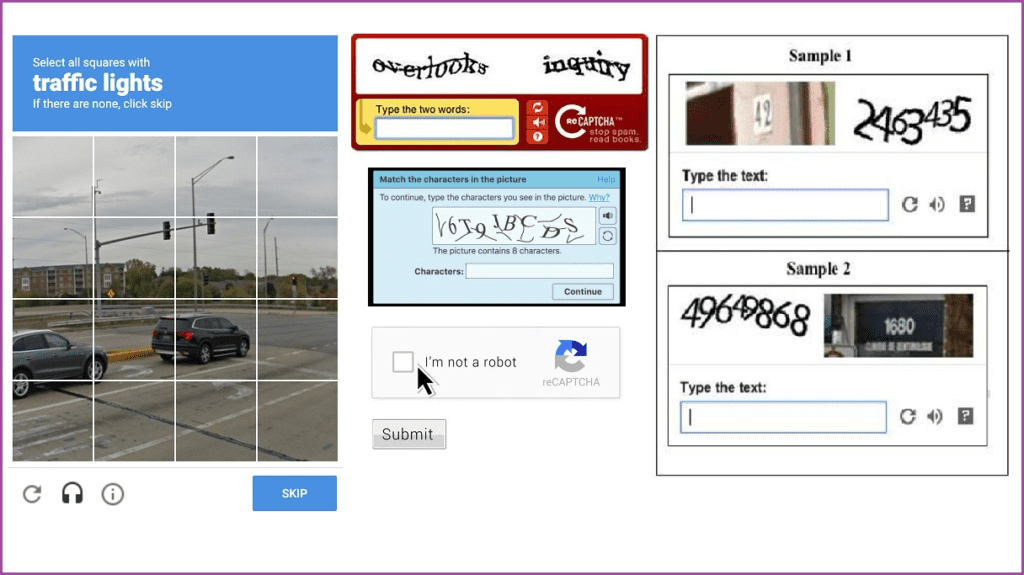

When you use a proxy server, your requests to access websites or data go through a separate server, which assigns a different IP address to your connection. This masks your real IP address. That way, you can stay anonymous and bypass restrictions, such as geo-blocking, rate limits, or CAPTCHAs.

Proxy services are great for tasks like data scraping, SEO monitoring, competitive analysis, or improving online privacy.
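To show the middleman idea in code, here is a small Python sketch that routes requests through a proxy using only the standard library. The proxy address is a made-up placeholder, and the `proxy_mapping` and `build_proxy_opener` helpers are names chosen for this example. Your provider will give you the real endpoint and any credentials.

```python
import urllib.request

def proxy_mapping(proxy_url):
    """Send both HTTP and HTTPS traffic to the same proxy endpoint."""
    return {"http": proxy_url, "https": proxy_url}

def build_proxy_opener(proxy_url):
    """Return an opener whose requests are routed through proxy_url."""
    handler = urllib.request.ProxyHandler(proxy_mapping(proxy_url))
    return urllib.request.build_opener(handler)

# Hypothetical endpoint; substitute the address your proxy provider gives you.
PROXY_URL = "http://proxy.example.com:8080"
opener = build_proxy_opener(PROXY_URL)

# Uncomment to send a real request through the proxy:
# with opener.open("https://example.com", timeout=10) as response:
#     print(response.status)
```

The target site sees the proxy's IP address instead of yours, which is the masking described above.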

When analyzing web traffic patterns through proxy services, we see interesting trends in how healthcare providers have adapted their digital presence. For instance, providers offering phentermine online through licensed telehealth platforms have used data-driven content strategies to serve localized patient needs while maintaining HIPAA compliance.

By using public web data to understand search patterns and patient concerns, these platforms show how analytics insights combined with sound technical SEO can strengthen both patient education and search visibility. When you use public data this way, it’s also worth understanding the differences between web filtering vs content filtering, so you stay compliant with data privacy regulations and keep your information accurate.

Overcoming IP restrictions and rate limits

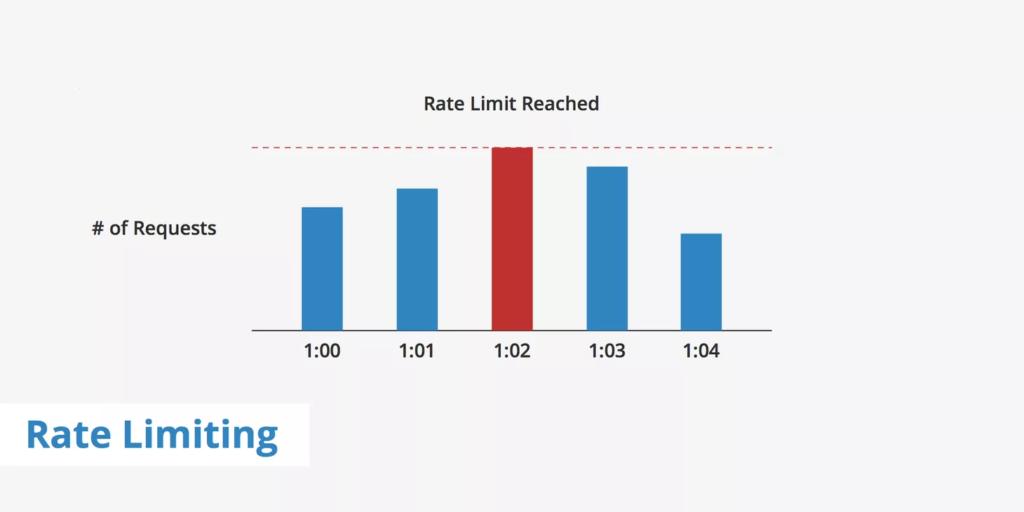

One of the biggest headaches in data scraping is running into IP restrictions or rate limits.

Websites often block repeated requests from the same IP address. But residential, datacenter, and mobile proxies can rotate IP addresses, which makes it look like your requests are coming from different users in different locations.

For example, residential proxies use real, geo-located IP addresses from ISPs, which makes them seem legitimate to websites. This boosts your success rate when accessing data.

SEO proxies are great for search engine activities. They help you work around rate limits on search engines like Google and reduce the chance of triggering CAPTCHAs.

Many web scraping tools like Bright Data offer proxy networks with high uptime so that your data collection efforts run smoothly without interruptions.

Bright Data provides an extensive proxy network and powerful scraping capabilities, enabling you to gain actionable insights into competitor activities, monitor keyword trends, and stay informed about market shifts.

This data-driven approach allows you to make more strategic decisions, enhancing your website’s visibility and overall SEO performance. By tapping into these tools, you can elevate your efforts, refine content strategies, and stay ahead in a competitive digital landscape.

Protecting privacy and security

Web data collection is a common use case for proxy servers. It also calls for higher security standards.

Using a reliable proxy helps you reduce the chances of a website blacklisting or rate-limiting your IP address.

For example, when monitoring competitors’ websites for pricing strategies, keyword usage, or backlink profiles, use proxies to gather insights without revealing your identity or intentions.

Proxies also help you bypass anti-scraping mechanisms, such as IP bans or CAPTCHA challenges, without exposing the origin of the data requests.

Enhancing data collection efficiency and scalability

Your SEO strategy depends on efficient and scalable web data collection. Why? Because you need consistent access to accurate web data.

Proxy solutions, including residential proxy providers like NodeMaven, help you collect large volumes of public data without triggering rate limits or IP bans.

By distributing requests across a pool of IP addresses (also called “rotating proxies”), proxies simulate organic traffic.

For example, when you’re performing keyword research, scraping search engine results, or analyzing competitors’ profiles to find backlinks, you can gather data seamlessly. This is even true for highly competitive or geo-restricted keywords.
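Here is a rough sketch of the rotation idea in Python. It pairs each URL with the next proxy in a pool, round-robin style. The proxy addresses, URLs, and the `assign_proxies` helper are hypothetical. Many providers handle rotation for you behind a single endpoint, so treat this as an illustration of the concept rather than how any particular service works.

```python
from itertools import cycle

def assign_proxies(urls, proxy_pool):
    """Pair each URL with the next proxy in the pool, wrapping around (round-robin)."""
    if not proxy_pool:
        raise ValueError("proxy_pool must contain at least one proxy")
    rotation = cycle(proxy_pool)
    return [(url, next(rotation)) for url in urls]

# Hypothetical proxy endpoints and target URLs.
proxy_pool = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
    "http://proxy-c.example.com:8080",
]
urls = [f"https://example.com/search?page={page}" for page in range(1, 6)]

for url, proxy in assign_proxies(urls, proxy_pool):
    print(f"{url} via {proxy}")
```

With three proxies and five URLs, the fourth request wraps back to the first proxy, so no single IP address carries every request.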

You can use geographic proxies for localized SEO. They provide access to search results and user behavior data specific to different regions.

Proxies can also enhance scalability because they allow you to manage data collection for multiple campaigns you may be running at the same time.

Whether you’re tracking hundreds of competitor sites or collecting real-time SERP data across various markets, a proxy provider supports the automation and scaling of these tasks.
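As a sketch of what that automation might look like, the Python snippet below runs a batch of keyword and market lookups in parallel. The `fake_fetch` function is a stand-in for illustration only. In a real setup it would be replaced by a proxy-backed request to whatever data source you use, and the keywords and market codes here are invented.

```python
from concurrent.futures import ThreadPoolExecutor

def collect_all(tasks, fetch, max_workers=4):
    """Run fetch(keyword, market) for every task in parallel; return results keyed by task."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with tasks.
        results = pool.map(lambda task: fetch(*task), tasks)
        return dict(zip(tasks, results))

def fake_fetch(keyword, market):
    # Stand-in for a real, proxy-backed SERP request; returns a label only.
    return f"results for '{keyword}' in {market}"

# Hypothetical keywords and markets.
tasks = [
    (keyword, market)
    for keyword in ("vegan restaurants", "window blinds")
    for market in ("us", "uk")
]

results = collect_all(tasks, fake_fetch)
for task, result in results.items():
    print(task, "->", result)
```

Keeping `max_workers` modest limits how many requests are in flight at once, which helps you stay within the limits of both your proxy plan and the sites you query.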

Harnessing public web data for SEO to boost your business

Now you know how to use public web data for SEO. It’s there waiting for you to unlock key insights about your business, industry, product, and customers.

With so much information available, you can fine-tune your SEO strategies and gain a competitive edge.

Of course, there’s a caveat. Accessing this valuable data isn’t always as simple as it seems. Many websites and platforms use measures to prevent scraping.

Proxies can help you overcome those obstacles. You can collect data without exposing your identity or risking blocks, so you can achieve seamless data collection at scale.

Need help creating an SEO strategy that actually gets results? Omniscient can help. Book a strategy call today.

Author bio:

Kelly Moser is the co-founder and editor at Home & Jet, a digital magazine for the modern era. She’s also the content manager at Login Lockdown, covering the latest trends in tech, business, and security. Kelly is an expert in freelance writing and content marketing for SaaS, Fintech, and e-commerce startups.